R5.1 Proof that the Exponential of the Transpose of a Matrix Equals the Transpose of the Matrix Exponential

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Given

An $n \times n$ matrix $\mathbf{A}$ and the power series of its exponential,

![{\displaystyle {\underset {\color {red}n\times n}{\exp[\mathbf {A} ]}}={\underset {\color {red}n\times n}{\mathbf {I} }}+{\underset {\color {red}n\times n}{{\frac {1}{1!}}\mathbf {A} }}+{\underset {\color {red}n\times n}{{\frac {1}{2!}}\mathbf {A} ^{2}}}+....={\underset {\color {red}n\times n}{\sum _{k=0}^{\infty }{\frac {1}{k!}}\mathbf {A} ^{k}}}}](../../dfb7911053443f9a547043a4e054268c7852eda8.svg) (Eq.(2)p.20-2b)

Show that

$$\exp[\mathbf{A}^T] = \left(\exp[\mathbf{A}]\right)^T \qquad \text{(Eq.5.1.1)}$$

Solution

We will first expand the LHS, then the RHS of (Eq. 5.1.1) using (Eq.(2)p.20-2b) and compare the two expressions.

Expanding the LHS,

![{\displaystyle {\underset {\color {red}n\times n}{\exp[\mathbf {A^{T}} ]}}={\underset {\color {red}n\times n}{\mathbf {I^{T}} }}+{\underset {\color {red}n\times n}{{\frac {1}{1!}}\mathbf {A^{T}} }}+{\underset {\color {red}n\times n}{{\frac {1}{2!}}[\mathbf {A^{T}} ]^{2}}}+....={\underset {\color {red}n\times n}{\sum _{k=0}^{\infty }{\frac {1}{k!}}\mathbf {[} {A^{T}}]^{k}}}}](../../6fb15d08e41aa66e8fb1fa8a240cf3ada9d0cd18.svg)

But we know that $\mathbf{I}^T = \mathbf{I}$, so

![{\displaystyle \therefore {\underset {\color {red}n\times n}{\exp[\mathbf {A^{T}} ]}}={\underset {\color {red}n\times n}{\mathbf {I} }}+{\underset {\color {red}n\times n}{{\frac {1}{1!}}\mathbf {A^{T}} }}+{\underset {\color {red}n\times n}{{\frac {1}{2!}}[\mathbf {A^{T}} ]^{2}}}+....={\underset {\color {red}n\times n}{\sum _{k=0}^{\infty }{\frac {1}{k!}}\mathbf {[} {A^{T}}]^{k}}}}](../../ad4129b624df01e192fb6d47229a4f03b61b06ca.svg) (Eq.5.1.2)

Now expanding the RHS,

![{\displaystyle {\underset {\color {red}n\times n}{\exp[\mathbf {A} ]^{T}}}=\left[{\underset {\color {red}n\times n}{\mathbf {I} }}+{\underset {\color {red}n\times n}{{\frac {1}{1!}}\mathbf {A} }}+{\underset {\color {red}n\times n}{{\frac {1}{2!}}[\mathbf {A} ]^{2}}}+....={\underset {\color {red}n\times n}{\sum _{k=0}^{\infty }{\frac {1}{k!}}\mathbf {[} {A}]^{k}}}\right]^{T}}](../../94da8a14d7bd04ee1cbf8149b52d4faa781c3463.svg)

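The transpose of a sum is the sum of the transposes, $(c\,\mathbf{X})^T = c\,\mathbf{X}^T$ for a scalar $c$, and $(\mathbf{A}^k)^T = (\mathbf{A}^T)^k$. Using these properties, this reduces to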

![{\displaystyle {\underset {\color {red}n\times n}{\exp[\mathbf {A^{T}} ]}}={\underset {\color {red}n\times n}{\mathbf {I^{T}} }}+{\underset {\color {red}n\times n}{{\frac {1}{1!}}\mathbf {A^{T}} }}+{\underset {\color {red}n\times n}{{\frac {1}{2!}}[\mathbf {A^{T}} ]^{2}}}+....={\underset {\color {red}n\times n}{\sum _{k=0}^{\infty }{\frac {1}{k!}}\mathbf {[} {A^{T}}]^{k}}}}](../../6fb15d08e41aa66e8fb1fa8a240cf3ada9d0cd18.svg)

or

![{\displaystyle {\underset {\color {red}n\times n}{\exp[\mathbf {A^{T}} ]}}={\underset {\color {red}n\times n}{\mathbf {I} }}+{\underset {\color {red}n\times n}{{\frac {1}{1!}}\mathbf {A^{T}} }}+{\underset {\color {red}n\times n}{{\frac {1}{2!}}[\mathbf {A^{T}} ]^{2}}}+....={\underset {\color {red}n\times n}{\sum _{k=0}^{\infty }{\frac {1}{k!}}\mathbf {[} {A^{T}}]^{k}}}}](../../8443f003e3600ba9b4347e46c05a8bea1f93b624.svg) (Eq.5.1.3)

Comparing (Eq. 5.1.2) and (Eq. 5.1.3), we conclude that LHS = RHS. Hence proved.
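As a quick numerical sanity check of (Eq.5.1.1), the sketch below sums a truncated version of the series (Eq.(2)p.20-2b) in plain Python. It compares exp[A^T] with (exp[A])^T for one arbitrarily chosen, non-symmetric 3×3 matrix. The helper names, the test matrix and the 30-term truncation are our own assumptions. The check illustrates the identity; it does not replace the proof.

```python
from math import factorial


def matmul(X, Y):
    """Product of two square matrices stored as lists of rows."""
    n = len(X)
    return [[sum(X[i][k] * Y[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def transpose(X):
    return [list(row) for row in zip(*X)]


def expm_series(A, terms=30):
    """Truncated series I + A/1! + A^2/2! + ... + A^(terms-1)/(terms-1)!."""
    n = len(A)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    power = [row[:] for row in result]          # A^0 = I
    for k in range(1, terms):
        power = matmul(power, A)                # A^k
        for i in range(n):
            for j in range(n):
                result[i][j] += power[i][j] / factorial(k)
    return result


# Hypothetical non-symmetric test matrix (any square matrix would do)
A = [[0.5, -1.0, 0.2],
     [0.3, 0.1, -0.4],
     [1.0, 0.0, -0.6]]

lhs = expm_series(transpose(A))     # exp[A^T]
rhs = transpose(expm_series(A))     # (exp[A])^T
max_diff = max(abs(lhs[i][j] - rhs[i][j]) for i in range(3) for j in range(3))
print(f"largest entry-wise difference: {max_diff:.2e}")
```

The two sides agree up to floating-point round-off, because both are built from the same truncated series.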

R5.2. Exponentiation of a Complex Diagonal Matrix [2]

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Given

A diagonal matrix

![{\displaystyle \displaystyle \mathbf {D} ={\text{Diag}}[d_{1},d_{2},d_{3},....,d_{n}]}](../../304c5979894a4a4083e322804309efa37f51ebc4.svg), where $d_i \in \mathbb{C}$. (Eq.(2)p.20-2b)

Problem

Show that

![{\displaystyle \displaystyle \exp[\mathbf {D} ]={\text{Diag}}[e^{d_{1}},e^{d_{2}},e^{d_{3}},....,e^{d_{n}}]}](../../2682012c86dc1b5f202150f33440843d19d769c1.svg), where ![{\displaystyle \exp[\mathbf {D} ]\in \mathbb {C} ^{n\times n}}](../../db33ca3cb6d7e14adbb81677bfe2e92171a08dbb.svg). (Eq.(3)p.20-2b)

Solution

We know, from Lecture Notes [3],

![{\displaystyle {\underset {\color {red}n\times n}{\exp[\mathbf {A} ]}}={\underset {\color {red}n\times n}{\mathbf {I} }}+{\underset {\color {red}n\times n}{{\frac {1}{1!}}\mathbf {A} }}+{\underset {\color {red}n\times n}{{\frac {1}{2!}}\mathbf {A} ^{2}}}+....={\underset {\color {red}n\times n}{\sum _{k=0}^{\infty }{\frac {1}{k!}}\mathbf {A} ^{k}}}}](../../dfb7911053443f9a547043a4e054268c7852eda8.svg) (Eq.(2)p.15-3)

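Let us consider a simple yet generic 4×4 complex diagonal matrix

$$\mathbf{D} = \begin{bmatrix}ai&0&0&0\\0&bi&0&0\\0&0&ci&0\\0&0&0&di\end{bmatrix} \qquad \text{(Eq.5.2.2)}$$

where $i = \sqrt{-1}$ and $a, b, c, d$ are constants.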

Applying (Eq.(2)p.15-3) to (Eq.5.2.2) and expanding,

![{\displaystyle \exp[\mathbf {D} ]=\underbrace {\begin{bmatrix}1&0&0&0\\0&1&0&0\\0&0&1&0\\0&0&0&1\end{bmatrix}} _{\color {blue}{\text{Matrix 1}}}+{\frac {1}{1!}}\underbrace {\begin{bmatrix}ai&0&0&0\\0&bi&0&0\\0&0&ci&0\\0&0&0&di\end{bmatrix}} _{\color {blue}{\text{Matrix 2}}}+{\frac {1}{2!}}\underbrace {{\begin{bmatrix}ai&0&0&0\\0&bi&0&0\\0&0&ci&0\\0&0&0&di\end{bmatrix}}*{\begin{bmatrix}ai&0&0&0\\0&bi&0&0\\0&0&ci&0\\0&0&0&di\end{bmatrix}}} _{\color {blue}{\text{Matrix 3}}}+\ldots +{\frac {1}{k!}}\underbrace {{\begin{bmatrix}ai&0&0&0\\0&bi&0&0\\0&0&ci&0\\0&0&0&di\end{bmatrix}}*\ldots *{\begin{bmatrix}ai&0&0&0\\0&bi&0&0\\0&0&ci&0\\0&0&0&di\end{bmatrix}}} _{\color {blue}{\text{multiply k times - Matrix k}}}}](../../ccdcdc83d748043fe2e918d29ec4d4070046be4f.svg) (Eq.5.2.3)

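Simplifying Matrix 3 and the other higher-power terms (up to Matrix k) as follows,

$$\mathbf{D}^2 = \begin{bmatrix}ai&0&0&0\\0&bi&0&0\\0&0&ci&0\\0&0&0&di\end{bmatrix}\begin{bmatrix}ai&0&0&0\\0&bi&0&0\\0&0&ci&0\\0&0&0&di\end{bmatrix} = \begin{bmatrix}(ai)^2&0&0&0\\0&(bi)^2&0&0\\0&0&(ci)^2&0\\0&0&0&(di)^2\end{bmatrix} \qquad \text{(Eq.5.2.4)}$$

Similarly,

$$\mathbf{D}^k = \begin{bmatrix}(ai)^k&0&0&0\\0&(bi)^k&0&0\\0&0&(ci)^k&0\\0&0&0&(di)^k\end{bmatrix} \qquad \text{(Eq.5.2.5)}$$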

Using (Eq.5.2.4) and (Eq.5.2.5) in (Eq.5.2.3) and carrying out simple matrix addition, we get,

![{\displaystyle exp[\mathbf {D} ]={\begin{bmatrix}(1+{\frac {1}{1!}}(ai)+{\frac {1}{2!}}(ai)^{2}+\ldots +{\frac {1}{k!}}(ai)^{k}&0&0&0\\0&(1+{\frac {1}{1!}}(bi)+{\frac {1}{2!}}(bi)^{2}+\ldots +{\frac {1}{k!}}(bi)^{k})&0&0\\0&0&(1+{\frac {1}{1!}}(ci)+{\frac {1}{2!}}(ci)^{2}+\ldots +{\frac {1}{k!}}(ci)^{k})&0\\0&0&0&(1+{\frac {1}{1!}}(di)+{\frac {1}{2!}}(di)^{2}+\ldots +{\frac {1}{k!}}(di)^{k})\end{bmatrix}}}](../../58cade2f57574279e0870a8ebd6669d69b88fddc.svg) (Eq.5.2.6)

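But every diagonal term of the matrix is of the form

$$1 + \frac{1}{1!}x + \frac{1}{2!}x^2 + \cdots + \frac{1}{k!}x^k + \cdots = \sum_{k=0}^{\infty}\frac{1}{k!}x^k = e^x \qquad \text{(Eq.5.2.7)}$$

with $x = ai, bi, ci, di$, respectively, once the series is carried on to $k \to \infty$.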

Therefore, (Eq.5.2.6) can be rewritten as,

![{\displaystyle exp[\mathbf {D} ]={\begin{bmatrix}exp(ai)&0&0&0\\0&exp(bi)&0&0\\0&0&exp(ci)&0\\0&0&0&exp(di)\end{bmatrix}}={\begin{bmatrix}e^{ai}&0&0&0\\0&e^{bi}&0&0\\0&0&e^{ci}&0\\0&0&0&e^{di}\end{bmatrix}}}](../../fa7f37ff534c0982f318acf3b5c1cca4a6d14e0e.svg) (Eq.5.2.8)

Here $ai, bi, ci, di$ are nothing but the diagonal elements $d_1, d_2, d_3, d_4$ of the original matrix in (Eq.5.2.2). Hence,

![{\displaystyle \displaystyle \exp[\mathbf {D} ]={\text{Diag}}[e^{d_{1}},e^{d_{2}},e^{d_{3}},e^{d_{4}}]}](../../5a8be73e384048e1a362bbd95fa86273f2a63699.svg) (Eq.5.2.9)

Similarly, for an $n \times n$ complex diagonal matrix, it can easily be shown that

![{\displaystyle \displaystyle \exp[\mathbf {D} ]={\text{Diag}}[e^{d_{1}},e^{d_{2}},e^{d_{3}},....,e^{d_{n}}]}](../../2682012c86dc1b5f202150f33440843d19d769c1.svg) (Eq.5.2.10)

Hence Proved.
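The result can be spot-checked numerically. The sketch below rests on our own assumptions: illustrative values a = 0.5, b = -1.2, c = 2, d = 3 and 40 series terms. It forms the full 4×4 matrix products of (Eq.5.2.3) instead of using the diagonal shortcut. This gives an independent check that the off-diagonal entries stay zero and that each diagonal entry equals e^{d_i}.

```python
import cmath
from math import factorial


def expm_series(M, terms=40):
    """Truncated series sum_{k<terms} M^k / k! using full matrix products."""
    n = len(M)
    result = [[complex(i == j) for j in range(n)] for i in range(n)]
    power = [row[:] for row in result]                      # M^0 = I
    for k in range(1, terms):
        power = [[sum(power[i][m] * M[m][j] for m in range(n)) for j in range(n)]
                 for i in range(n)]                         # M^k
        for i in range(n):
            for j in range(n):
                result[i][j] += power[i][j] / factorial(k)
    return result


# Hypothetical values for the constants a, b, c, d of (Eq.5.2.2)
a, b, c, d = 0.5, -1.2, 2.0, 3.0
entries = [a * 1j, b * 1j, c * 1j, d * 1j]
D = [[entries[i] if i == j else 0j for j in range(4)] for i in range(4)]

E = expm_series(D)
diag_err = max(abs(E[i][i] - cmath.exp(entries[i])) for i in range(4))
offdiag_max = max(abs(E[i][j]) for i in range(4) for j in range(4) if i != j)
print(diag_err, offdiag_max)
```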

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Given

A matrix $\mathbf{A}$ can be decomposed as

$$\mathbf{A} = \mathbf{\Phi}\,\mathbf{\Lambda}\,\mathbf{\Phi}^{-1} \qquad \text{(Eq.(2) p.20-4)}$$

where $\mathbf{\Lambda}$ is the diagonal matrix of eigenvalues of matrix $\mathbf{A}$,

$$\mathbf{\Lambda} = \text{Diag}[\lambda_1, \lambda_2, \ldots, \lambda_n] \in \mathbb{C}^{n\times n} \qquad \text{(Eq.(5) p.20-3)}$$

and $\mathbf{\Phi}$ is the matrix formed by the $n$ linearly independent eigenvectors $\boldsymbol{\phi}_i$ of matrix $\mathbf{A}$, that is,

![{\displaystyle \displaystyle \mathbf {\Phi } =[{\boldsymbol {\phi }}_{1},{\boldsymbol {\phi }}_{2},\ldots ,{\boldsymbol {\phi }}_{n}]\in \mathbb {C} ^{n\times n}}](../../292407df5e6aa518047337521aa9817c65b57d2a.svg) (Eq.(2) p.20-3)

Problem

Show that

![{\displaystyle \displaystyle \exp[\mathbf {A} ]=\mathbf {\Phi } \,{\text{Diag}}[\,e^{\lambda _{1}},\,e^{\lambda _{2}},\ldots ,\,e^{\lambda _{n}}]\mathbf {\Phi } ^{-1}}](../../2e6bca542a5c8e16f29f53c7e2364c36a2728a3c.svg)

Solution

The power series expansion of the exponential of matrix $\mathbf{A}$ in terms of that matrix is

![{\displaystyle \displaystyle \exp[\mathbf {A} ]=\mathbf {I} +{\frac {1}{1!}}\mathbf {A} +{\frac {1}{2!}}\mathbf {A} ^{2}+{\frac {1}{3!}}\mathbf {A} ^{3}+\cdots =\sum _{k=0}^{\infty }{\frac {1}{k!}}\mathbf {A} ^{k}}](../../79268f0f002158c3ceba8c6f4267f135ae00e513.svg) (Eq.(2) p.15-3)

Since matrix $\mathbf{A}$ can be decomposed as

$$\mathbf{A} = \mathbf{\Phi}\,\mathbf{\Lambda}\,\mathbf{\Phi}^{-1} \qquad \text{(Eq.(2) p.20-4)}$$

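Expanding the $k$-th power of matrix $\mathbf{A}$ yields

$$\mathbf{A}^k = \underbrace{(\mathbf{\Phi}\,\mathbf{\Lambda}\,\mathbf{\Phi}^{-1})(\mathbf{\Phi}\,\mathbf{\Lambda}\,\mathbf{\Phi}^{-1})\cdots(\mathbf{\Phi}\,\mathbf{\Lambda}\,\mathbf{\Phi}^{-1})}_{k\ \text{factors}} \qquad \text{(Eq.5.3.1)}$$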

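where each factor $\mathbf{\Phi}^{-1}$ next to a factor $\mathbf{\Phi}$ cancels in pairs, that is,

$$\mathbf{\Phi}^{-1}\mathbf{\Phi} = \mathbf{I} \qquad \text{(Eq.5.3.2)}$$

$$\mathbf{A}^k = \mathbf{\Phi}\,\mathbf{\Lambda}\,\mathbf{I}\,\mathbf{\Lambda}\,\mathbf{I}\cdots\mathbf{I}\,\mathbf{\Lambda}\,\mathbf{\Phi}^{-1} \qquad \text{(Eq.5.3.3)}$$

$$\mathbf{A}^k = \mathbf{\Phi}\,\mathbf{\Lambda}^k\,\mathbf{\Phi}^{-1} \qquad \text{(Eq.5.3.4)}$$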

Thus, the equation (Eq. 5.3.1) can be expressed as

![{\displaystyle \displaystyle \exp[\mathbf {A} ]=\sum _{k=0}^{\infty }{\frac {1}{k!}}[\mathbf {A} ^{k}]=\sum _{k=0}^{\infty }{\frac {1}{k!}}[\mathbf {\Phi } \,\mathbf {\Lambda } ^{k}\mathbf {\Phi } ^{-1}]}](../../5f25b0294f260d0242da774afffd7cf7208c1ffb.svg) (Eq.5.3.5)

![{\displaystyle \displaystyle \Rightarrow \exp[\mathbf {A} ]=\mathbf {\Phi } \,\sum _{k=0}^{\infty }{\frac {1}{k!}}[\mathbf {\Lambda } ^{k}]\mathbf {\Phi } ^{-1}}](../../e0a1081f6f66b397e787ff22b1f1eb091e9aa73e.svg) (Eq.5.3.6)

According to the equation (Eq.(2) p.15-3), now we have,

![{\displaystyle \displaystyle \exp[\mathbf {A} ]=\mathbf {\Phi } \,\exp[\mathbf {\Lambda } ]\mathbf {\Phi } ^{-1}}](../../5a1d1eb3876f3d3437495cba394e8c118d7cfa76.svg) (Eq.5.3.7)

Referring to the conclusion obtained in R5.2, which is

![{\displaystyle \displaystyle \exp[\mathbf {D} ]={\text{Diag}}[\,e^{d_{1}},\,e^{d_{2}},\ldots ,\,e^{d_{n}}]}](../../0aa2effa2f0cc5896028ea20ec6e2aa7b78152ab.svg) (Eq.(3) p.20-2b)

Replacing the matrix $\mathbf{D}$ with $\mathbf{\Lambda}$ and the elements $d_i$ with $\lambda_i$, where $i = 1, 2, \ldots, n$, and then substituting into (Eq. 5.3.7) yields

![{\displaystyle \displaystyle \exp[\mathbf {A} ]=\mathbf {\Phi } \,{\text{Diag}}[\,e^{\lambda _{1}},\,e^{\lambda _{2}},\ldots ,\,e^{\lambda _{n}}]\mathbf {\Phi } ^{-1}}](../../2e6bca542a5c8e16f29f53c7e2364c36a2728a3c.svg) (Eq.5.3.8)
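To see (Eq.5.3.8) at work, the sketch below builds a matrix from a chosen decomposition A = ΦΛΦ^{-1}. It then compares the truncated power series of exp[A] with Φ Diag[e^{λ_1}, e^{λ_2}] Φ^{-1}. The following choices are illustrative assumptions only:

- Φ is picked with determinant 1, so its inverse is exact.
- The eigenvalues are 0.5 and -1.
- The series is truncated at 40 terms.

```python
import math


def matmul(X, Y):
    """Matrix product for matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def expm_series(A, terms=40):
    """Truncated power series (Eq.(2) p.15-3): sum of A^k / k! for k < terms."""
    n = len(A)
    result = [[float(i == j) for j in range(n)] for i in range(n)]
    power = [row[:] for row in result]                  # A^0 = I
    for k in range(1, terms):
        power = matmul(power, A)                        # A^k
        result = [[result[i][j] + power[i][j] / math.factorial(k) for j in range(n)]
                  for i in range(n)]
    return result


phi = [[1.0, 1.0], [1.0, 2.0]]        # hypothetical eigenvector matrix, det = 1
phi_inv = [[2.0, -1.0], [-1.0, 1.0]]  # its exact inverse
lams = [0.5, -1.0]                    # hypothetical eigenvalues
A = matmul(matmul(phi, [[lams[0], 0.0], [0.0, lams[1]]]), phi_inv)   # Phi Lambda Phi^-1

series = expm_series(A)
exp_lam = [[math.exp(lams[0]), 0.0], [0.0, math.exp(lams[1])]]
decomposed = matmul(matmul(phi, exp_lam), phi_inv)  # Phi Diag[e^l1, e^l2] Phi^-1
err = max(abs(series[i][j] - decomposed[i][j]) for i in range(2) for j in range(2))
print(A, err)
```

Only scalar exponentials e^{λ_i} are needed on the right-hand side. This is the practical appeal of the decomposition once Φ and Λ are known.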

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Given

The exponential of a matrix $\mathbf{A}$ can be decomposed as

![{\displaystyle \displaystyle \exp[\mathbf {A} ]=\mathbf {\Phi } \,{\text{Diag}}[\,e^{\lambda _{1}},\,e^{\lambda _{2}},\ldots ,\,e^{\lambda _{n}}]\mathbf {\Phi } ^{-1}}](../../2e6bca542a5c8e16f29f53c7e2364c36a2728a3c.svg) (Eq.(3) p.20-4)

The matrix $\mathbf{B}$ is defined in the lecture notes as

$$\mathbf{B} = \begin{bmatrix}0&-1\\1&0\end{bmatrix} \qquad \text{(Eq.(1) p.20-2)}$$

Problem

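Show that

$$\mathbf{B} = \mathbf{\Phi}\,\mathbf{\Lambda}\,\mathbf{\Phi}^{-1}, \qquad \mathbf{\Lambda} = \text{Diag}[i, -i], \qquad \mathbf{\Phi} = \begin{bmatrix}1&1\\-i&i\end{bmatrix} \qquad \text{(Eq.(1) p.20-5)}$$

and

![{\displaystyle \displaystyle \displaystyle \exp[\mathbf {B} t]={\begin{bmatrix}\cos t&-\sin t\\\sin t&\cos t\end{bmatrix}}\neq {\begin{bmatrix}1&e^{-t}\\e^{t}&1\end{bmatrix}}}](../../f75f8d6d27fce1b8996b4fe65cd036a40805c8c6.svg) (Eq.(2) p.20-5)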

Solution

To show (Eq.(1) p.20-5), we first find the eigenvalues $\lambda$ of matrix $\mathbf{B}$ from the characteristic equation, with $\mathbf{I}$ denoting the identity matrix:

$$\det(\mathbf{B} - \lambda\mathbf{I}) = \det\begin{bmatrix}-\lambda&-1\\1&-\lambda\end{bmatrix} = \lambda^2 + 1 = 0 \quad\Rightarrow\quad \lambda_1 = i, \quad \lambda_2 = -i$$

Having obtained both eigenvalues of matrix $\mathbf{B}$, we now solve for the two corresponding eigenvectors from

$$(\mathbf{B} - \lambda_j\mathbf{I})\,\boldsymbol{\phi}_j = \mathbf{0}, \qquad j = 1, 2 \qquad \text{(Eq.5.4.1)}$$

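Thus, for the first value $\lambda_1 = i$, writing $\boldsymbol{\phi}_1 = [x_1, x_2]^T$, we have

$$\begin{bmatrix}-i&-1\\1&-i\end{bmatrix}\begin{bmatrix}x_1\\x_2\end{bmatrix} = \begin{bmatrix}0\\0\end{bmatrix} \qquad \text{(Eq.5.4.2)}$$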

Substituting $x_1 = 1$ into the equations above and solving yields, for the eigenvalue $\lambda_1 = i$,

$$\boldsymbol{\phi}_1 = \begin{bmatrix}1\\-i\end{bmatrix} \qquad \text{(Eq.5.4.3)}$$

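Similarly, the eigenvector $\boldsymbol{\phi}_2 = [x_1, x_2]^T$ corresponding to $\lambda_2 = -i$ follows from

$$\begin{bmatrix}i&-1\\1&i\end{bmatrix}\begin{bmatrix}x_1\\x_2\end{bmatrix} = \begin{bmatrix}0\\0\end{bmatrix} \qquad \text{(Eq.5.4.4)}$$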

Substituting $x_1 = 1$ into the equations above and solving yields, for the eigenvalue $\lambda_2 = -i$,

$$\boldsymbol{\phi}_2 = \begin{bmatrix}1\\i\end{bmatrix} \qquad \text{(Eq.5.4.5)}$$

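We have now obtained the two eigenvectors $\boldsymbol{\phi}_1$ and $\boldsymbol{\phi}_2$ of matrix $\mathbf{B}$, where

$$\boldsymbol{\phi}_1 = \begin{bmatrix}1\\-i\end{bmatrix}, \qquad \boldsymbol{\phi}_2 = \begin{bmatrix}1\\i\end{bmatrix} \qquad \text{(Eq.5.4.6)}$$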

Thus we have

![{\displaystyle \displaystyle \mathbf {\Phi } =[{\boldsymbol {\phi }}_{1},{\boldsymbol {\phi }}_{2}]={\begin{bmatrix}1&1\\-i&i\end{bmatrix}}}](../../a28d479df6b5e9c5fb68b7e6bb19c2354843e21b.svg) (Eq.5.4.7)

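Then, calculating the inverse of matrix $\mathbf{\Phi}$ (whose determinant is $2i$) yields

$$\mathbf{\Phi}^{-1} = \frac{1}{2i}\begin{bmatrix}i&-1\\i&1\end{bmatrix} \qquad \text{(Eq.5.4.8)}$$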

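Therefore we reach the conclusion that

$$\mathbf{\Phi}\,\mathbf{\Lambda}\,\mathbf{\Phi}^{-1} = \begin{bmatrix}1&1\\-i&i\end{bmatrix}\begin{bmatrix}i&0\\0&-i\end{bmatrix}\frac{1}{2i}\begin{bmatrix}i&-1\\i&1\end{bmatrix} = \begin{bmatrix}0&-1\\1&0\end{bmatrix} = \mathbf{B} \qquad \text{(Eq.(1) p.20-5)}$$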

According to the conclusion we reached in R5.3, we have

![{\displaystyle \displaystyle \exp[\mathbf {B} ]=\mathbf {\Phi } \,{\text{Diag}}[\,e^{\lambda _{1}},\,e^{\lambda _{2}}]\mathbf {\Phi } ^{-1}}](../../7fa45cda27eec7c6ba7d40b79fd23912ad08c85d.svg)

The matrix $\mathbf{B}t$ has the same eigenvectors as $\mathbf{B}$ and the eigenvalues $\lambda_1 t = it$ and $\lambda_2 t = -it$. Applying the same result to $\mathbf{B}t$ therefore gives

![{\displaystyle \displaystyle \Rightarrow \exp[\mathbf {B} t]={\begin{bmatrix}1&1\\-i&i\end{bmatrix}}{\begin{bmatrix}e^{it}&0\\0&e^{-it}\end{bmatrix}}{\begin{bmatrix}i&-1\\i&1\end{bmatrix}}{\frac {1}{2i}}}](../../95eb03f90369ae310b9702623f8987e29071fba1.svg)

Carrying out the matrix multiplication on the right-hand side of the equation above yields

![{\displaystyle \displaystyle \exp[\mathbf {B} t]={\begin{bmatrix}e^{it}&e^{-it}\\-ie^{it}&ie^{-it}\end{bmatrix}}{\begin{bmatrix}i&-1\\i&1\end{bmatrix}}{\frac {1}{2i}}}](../../41ff5529c7515e98ad311d5583af132fa5377b80.svg)

$$\Rightarrow \exp[\mathbf{B}t] = \begin{bmatrix}ie^{it}+ie^{-it} & e^{-it}-e^{it}\\ e^{it}-e^{-it} & ie^{it}+ie^{-it}\end{bmatrix}\frac{1}{2i}$$

![{\displaystyle \displaystyle \Rightarrow \exp[\mathbf {B} t]={\begin{bmatrix}{\frac {1}{2}}(e^{it}+e^{-it})&{\frac {1}{2i}}(e^{-it}-e^{it})\\{\frac {1}{2i}}(e^{it}-e^{-it})&{\frac {1}{2}}(e^{it}+e^{-it})\end{bmatrix}}}](../../cd46a62f16815976701a5b8c51c0fbcf15b6487e.svg) (Eq.5.4.9)

Consider Euler’s formula [4],

$$e^{it} = \cos t + i\sin t \qquad \text{(Eq.5.4.10)}$$

Replacing $t$ with $-t$ yields

$$e^{-it} = \cos t - i\sin t \qquad \text{(Eq.5.4.11)}$$

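Solving (Eq.5.4.10) together with (Eq.5.4.11), we have

$$\cos t = \frac{1}{2}\left(e^{it} + e^{-it}\right), \qquad \sin t = \frac{1}{2i}\left(e^{it} - e^{-it}\right) \qquad \text{(Eq.5.4.12)}$$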

Substituting (Eq.5.4.12) into (Eq.5.4.9) yields

![{\displaystyle \displaystyle \exp[\mathbf {B} t]={\begin{bmatrix}\cos t&-\sin t\\\sin t&\cos t\end{bmatrix}}}](../../fd7a9f0238fe9004168e1c307c2f7838abedaac8.svg) (Eq.(2) p.20-5)

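Obviously, this is not the element-wise exponential of $\mathbf{B}t = \begin{bmatrix}0&-t\\t&0\end{bmatrix}$:

$$\exp[\mathbf{B}t] = \begin{bmatrix}\cos t&-\sin t\\\sin t&\cos t\end{bmatrix} \neq \begin{bmatrix}1&e^{-t}\\e^{t}&1\end{bmatrix}.$$

At $t = 0$, for example, the left-hand side is the identity matrix, while every entry of the right-hand side equals 1.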
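Below is a short numerical check of (Eq.(2) p.20-5), assuming t = 0.7 (any real t works). It uses Python's built-in complex numbers and proceeds in three steps:

1. Evaluate Φ Diag[e^{it}, e^{-it}] Φ^{-1}, with Φ from (Eq.5.4.7) and Φ^{-1} from (Eq.5.4.8).
2. Compare the result with the rotation matrix.
3. Contrast it with the element-wise exponential of Bt.

The helper names are our own.

```python
import cmath
import math


def matmul2(X, Y):
    """Product of two 2x2 matrices stored as lists of rows."""
    return [[sum(X[i][k] * Y[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]


def exp_Bt(t):
    """exp[Bt] = Phi Diag[e^{it}, e^{-it}] Phi^{-1}."""
    phi = [[1, 1], [-1j, 1j]]
    phi_inv = [[1j / 2j, -1 / 2j], [1j / 2j, 1 / 2j]]   # (1/2i) [[i, -1], [i, 1]]
    lam = [[cmath.exp(1j * t), 0], [0, cmath.exp(-1j * t)]]
    return matmul2(matmul2(phi, lam), phi_inv)


t = 0.7
E = exp_Bt(t)
R = [[math.cos(t), -math.sin(t)], [math.sin(t), math.cos(t)]]
err = max(abs(E[i][j] - R[i][j]) for i in range(2) for j in range(2))

# Element-wise exponential of Bt = [[0, -t], [t, 0]], which is NOT exp[Bt]
naive = [[1.0, math.exp(-t)], [math.exp(t), 1.0]]
print(err, naive[0][1], R[0][1])
```

The imaginary parts cancel to round-off, so the complex-valued product reproduces the real rotation matrix.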

R*5.5 Generating a class of exact L2-ODE-VC [5]

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Given

An L2-ODE-VC [6]:

(Eq. 5.5.1)

The first integral $\phi$ can also be expressed as:

(Eq. 5.5.2)

|

Show that (Eq. 5.5.1) and (Eq. 5.5.2) lead to a general class of exact L2-ODE-VC of the form:

(Eq. 5.5.3)

Solution

Nomenclature

Derivation of Eq. 5.5.3

The first exactness condition for L2-ODE-VC: [8]

(Eq. 5.5.4)

From (Eq. 5.5.1) and (Eq. 5.5.4), we can infer that

(Eq. 5.5.5)

Integrating (Eq. 5.5.5) w.r.t. p, we obtain:

(Eq. 5.5.6)

The partial derivatives of $\phi$ w.r.t. x and y can be written as:

(Eq. 5.5.7)

(Eq. 5.5.8)

Substituting the partial derivatives of $\phi$ w.r.t. x, y and p [(Eq. 5.5.7), (Eq. 5.5.8), (Eq. 5.5.6)] into (Eq. 5.5.4), we obtain:

(Eq. 5.5.8)

Comparing (Eq. 5.5.8) with (Eq. 5.5.1), we can write:

(Eq. 5.5.9)

Thus

Integrating w.r.t x,

(Eq. 5.5.10)

Substituting the function obtained in (Eq. 5.5.10) back into the expression for $\phi$ obtained in (Eq. 5.5.6), we obtain:

(Eq. 5.5.11)

The partial derivative of $\phi$ (Eq. 5.5.11) w.r.t. y is

(Eq. 5.5.12)

But from (Eq. 5.5.1) and (Eq. 5.5.2), we see that  .

So,

Since  is only a function of  , we can now say that  and  .

Thus  is a constant.

Hence we obtain the following expression:

(Eq. 5.5.13)

which represents a general class of exact L2-ODE-VC.

R*5.6 Solving an L2-ODE-VC [9]

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Given

$$\cos x\;y'' + (x^2 - \sin x)\,y' + 2xy = 0 \qquad \text{(Eq. 5.6.1)}$$

Problem

1. Show that (Eq. 5.6.1) is exact.

2. Find the first integral $\phi$.

3. Solve for $y(x)$.

Solution

Nomenclature

The exactness conditions for an N2-ODE (nonlinear second-order ordinary differential equation) are:

First Exactness condition

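For an equation to be exact, it must be of the form

$$G(x,y,p,y'') = f(x,y,p)\,y'' + g(x,y,p) = 0, \qquad p := y' \qquad \text{(Eq. 5.6.2)}$$

$$f = \phi_p, \qquad g = \phi_x + \phi_y\,p \qquad \text{(Eq. 5.6.3)}$$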

Second Exactness Condition

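$$f_{xx} + 2p\,f_{xy} + p^2 f_{yy} = g_{xp} + p\,g_{yp} - g_y \qquad \text{(Eq. 5.6.4)}$$

$$f_{xp} + p\,f_{yp} + 2f_y = g_{pp} \qquad \text{(Eq. 5.6.5)}$$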

Work

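We have

$$\cos x\;y'' + (x^2 - \sin x)\,y' + 2xy = 0,$$

where we can identify

$$f(x,y,p) = \cos x$$

and

$$g(x,y,p) = (x^2 - \sin x)\,p + 2xy.$$

Thus the equation satisfies the first exactness condition.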

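For the second exactness condition, we first calculate the various partial derivatives of f and g:

$$f_x = -\sin x, \quad f_{xx} = -\cos x, \quad f_y = f_{yy} = f_{xy} = f_p = f_{xp} = f_{yp} = 0$$

$$g_y = 2x, \quad g_p = x^2 - \sin x, \quad g_{xp} = 2x - \cos x, \quad g_{yp} = 0, \quad g_{pp} = 0$$

Substituting these values in (Eq. 5.6.4), we get

$$-\cos x + 0 + 0 = (2x - \cos x) + 0 - 2x = -\cos x$$

Therefore the first relation is satisfied.

Substituting the values in (Eq. 5.6.5), we get

$$0 + 0 + 0 = 0$$

Therefore the second relation is satisfied as well.

Thus the second exactness condition is satisfied, and the given differential equation is exact.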

Now, we have $\phi_p = f = \cos x$. Integrating w.r.t. p, we get

$$\phi = p\cos x + h(x,y) \qquad \text{(Eq. 5.6.6)}$$

where h(x,y) is a function of integration, since we integrated only partially w.r.t. p.

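Partially differentiating (Eq. 5.6.6) w.r.t. x,

$$\phi_x = -p\sin x + h_x$$

Partially differentiating (Eq. 5.6.6) w.r.t. y,

$$\phi_y = h_y$$

From (Eq. 5.6.3), we have

$$g = \phi_x + \phi_y\,p = -p\sin x + h_x + h_y\,p$$

We have established that

$$g = (x^2 - \sin x)\,p + 2xy$$

Comparing the two equations, we get

$$h_x + h_y\,p = x^2 p + 2xy \quad\Rightarrow\quad h_y = x^2, \quad h_x = 2xy$$

On integrating (and absorbing the additive constant into the constant of the first integral),

$$h(x,y) = x^2 y$$

Thus,

$$\phi = p\cos x + x^2 y$$

Thus, setting $\phi = k$, we have

$$y'\cos x + x^2 y = k \quad\Rightarrow\quad y' + \frac{x^2}{\cos x}\,y = \frac{k}{\cos x}$$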

This linear first-order ODE (L1-ODE-VC) can be solved with the familiar integrating factor method:

$$y = \frac{1}{\mu(x)}\left[\int \mu(x)\,\frac{k}{\cos x}\,dx + c\right], \qquad \mu(x) = \exp\left(\int \frac{x^2}{\cos x}\,dx\right)$$
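As a numerical cross-check (our own sketch, not part of the original solution), the code below integrates the ODE on 0 ≤ x ≤ 1, where cos x > 0. It uses a classical fourth-order Runge-Kutta scheme and monitors the first integral φ = y' cos x + x² y along the way. The sketch rests on the following assumptions:

- (Eq. 5.6.1) reads cos x y'' + (x² − sin x) y' + 2xy = 0. This is consistent with f = cos x and with the first-order equation y' + (x²/cos x) y = k/cos x used in the integrating factor step.
- The initial values y(0) = 1 and y'(0) = 0.5 are arbitrary.

If the ODE is exact with this first integral, φ should stay constant up to the integration error.

```python
import math


def rhs(x, y, p):
    """(y', p') for cos(x) y'' + (x^2 - sin x) y' + 2 x y = 0, with p = y'."""
    return p, -((x * x - math.sin(x)) * p + 2.0 * x * y) / math.cos(x)


def first_integral(x, y, p):
    """phi = p cos x + x^2 y, expected to stay equal to the constant k."""
    return p * math.cos(x) + x * x * y


def rk4(x, y, p, x_end, n=1000):
    """Classical 4th-order Runge-Kutta for the system y' = p, p' = rhs."""
    h = (x_end - x) / n
    for _ in range(n):
        k1 = rhs(x, y, p)
        k2 = rhs(x + h / 2, y + h / 2 * k1[0], p + h / 2 * k1[1])
        k3 = rhs(x + h / 2, y + h / 2 * k2[0], p + h / 2 * k2[1])
        k4 = rhs(x + h, y + h * k3[0], p + h * k3[1])
        y += h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0])
        p += h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1])
        x += h
    return x, y, p


x0, y0, p0 = 0.0, 1.0, 0.5          # hypothetical initial conditions
k = first_integral(x0, y0, p0)      # k = 0.5 for these values
x1, y1, p1 = rk4(x0, y0, p0, 1.0)
drift = abs(first_integral(x1, y1, p1) - k)
print(f"phi drift over [0, 1]: {drift:.1e}")
```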

R*5.7 Show equivalence to symmetry of second partial derivatives of first integral [11]

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Given

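$$g_0 - \frac{dg_1}{dx} + \frac{d^2 g_2}{dx^2} = 0 \qquad \text{(Eq.(1) p.22-3)}$$

where $g_i := \partial G/\partial y^{(i)}$ for $i = 0, 1, 2$, with $p := y'$, and

$$G(x,y,p,y'') = \frac{d\phi}{dx} = \phi_x + \phi_y\,p + \phi_p\,y'' \qquad \text{(Eq.(3) p.21-7)}$$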

Problem

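Show equivalence to symmetry of mixed second partial derivatives of the first integral, that is,

$$\phi_{xy} = \phi_{yx}, \qquad \phi_{yp} = \phi_{py}, \qquad \phi_{xp} = \phi_{px}$$

where $\phi_{xy} := \frac{\partial}{\partial y}\left(\frac{\partial \phi}{\partial x}\right)$, and similarly for the other mixed derivatives.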

Solution

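$$G = \frac{d\phi}{dx} = \phi_x + \phi_y\,p + \phi_p\,y'' \qquad \text{(Eq.(3) p.21-7)}$$

$$g_1 = \frac{\partial G}{\partial p} = \phi_{xp} + \phi_{yp}\,p + \phi_{pp}\,y'' + \phi_y \qquad \text{(Eq.(2) p.22-4)}$$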

From (Eq.(2)p.22-4), we have,

![{\displaystyle \displaystyle {\frac {d}{dx}}g_{1}={\frac {d}{dx}}[\phi _{xp}+\phi _{yp}y'+\phi _{pp}y'']+{\frac {d\phi _{y}}{dx}}}](../../b29e918da2f4f3d6812317875ce3bfc5462f6c8e.svg)

![{\displaystyle \displaystyle \Rightarrow {\frac {d}{dx}}g_{1}={\frac {d}{dx}}[\phi _{xp}+\phi _{yp}p+\phi _{pp}y'']+{\frac {d}{dx}}({\frac {\partial \phi }{\partial y}})}](../../393df03e8a773c82fe23ec986a887e7e0e0a55db.svg) (Eq. 5.7.1)

![{\displaystyle \displaystyle {\frac {d^{2}}{dx^{2}}}g_{2}={\frac {d}{dx}}[\phi _{px}+\phi _{py}y'+\phi _{pp}y'']}](../../54e73b09b84f5e4b19d24b795611b073f0c0b104.svg) (Eq.(3) p.22-4)

Substituting (Eq.(3)p.21-7),(Eq. 5.7.1) and (Eq.(3)p.22-4) into (Eq.(1)p.22-3) yields

![{\displaystyle \displaystyle {\frac {\partial }{\partial y}}({\frac {d\phi }{dx}})-{\frac {d}{dx}}[\phi _{xp}+\phi _{yp}y'+\phi _{pp}y'']-{\frac {d}{dx}}({\frac {\partial \phi }{\partial y}})+{\frac {d}{dx}}[\phi _{px}+\phi _{py}y'+\phi _{pp}y'']=0}](../../286ee8e4b42148850a906db4f712672b4ea15877.svg)

![{\displaystyle \displaystyle \Rightarrow {\frac {\partial }{\partial y}}({\frac {d\phi }{dx}})-{\frac {d}{dx}}({\frac {\partial \phi }{\partial y}})+{\frac {d}{dx}}[(\phi _{px}-\phi _{xp})+(\phi _{py}-\phi _{yp})p]=0}](../../95619f7f6ef172c28b54255a74257dcbee00bc41.svg) (Eq. 5.7.2)

Because

![{\displaystyle \displaystyle {\frac {d}{dx}}({\frac {\partial \phi }{\partial y}})=[{\frac {\partial }{\partial x}}({\frac {\partial \phi }{\partial y}})+{\frac {\partial }{\partial y}}({\frac {\partial \phi }{\partial y}}){\frac {dy}{dx}}+{\frac {\partial }{\partial y'}}({\frac {\partial \phi }{\partial y}}){\frac {dy'}{dx}}]=\phi _{yx}+\phi _{yy}{\frac {dy}{dx}}+\phi _{yp}{\frac {dy'}{dx}}}](../../e048e48b4896099ce600ae05c416987c7bf7c836.svg)

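Thus, since $\frac{\partial}{\partial y}\left(\frac{d\phi}{dx}\right) = \phi_{xy} + \phi_{yy}\,p + \phi_{py}\,y''$,

$$\frac{\partial}{\partial y}\left(\frac{d\phi}{dx}\right) - \frac{d}{dx}\left(\frac{\partial \phi}{\partial y}\right) = (\phi_{xy} - \phi_{yx}) + (\phi_{py} - \phi_{yp})\,y'' \qquad \text{(Eq. 5.7.3)}$$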

Substituting (Eq. 5.7.3) into (Eq. 5.7.2) yields

![{\displaystyle \displaystyle (\phi _{xy}-\phi _{yx})+(\phi _{py}-\phi _{yp})y''+{\frac {d}{dx}}[(\phi _{px}-\phi _{xp})+(\phi _{py}-\phi _{yp})p]=0}](../../954785daf9d64fa633ef06735d1e2f761eec2278.svg) (Eq. 5.7.4)

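Because

$$\frac{d}{dx}\left[(\phi_{py} - \phi_{yp})\,p\right] = p\,\frac{d}{dx}(\phi_{py} - \phi_{yp}) + (\phi_{py} - \phi_{yp})\,y'' \qquad \text{(Eq. 5.7.5)}$$

substituting (Eq. 5.7.5) into (Eq. 5.7.4), we have

$$(\phi_{xy} - \phi_{yx}) + \left(2y'' + p\,\frac{d}{dx}\right)(\phi_{py} - \phi_{yp}) + \frac{d}{dx}(\phi_{px} - \phi_{xp}) = 0 \qquad \text{(Eq. 5.7.6)}$$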

$y''$ and $p = y'$ can be the second and first derivatives of any solution function $y(x)$ of any second-order ODE under consideration. Consider the factor $\left(2y'' + p\,\frac{d}{dx}\right)$, which consists of two derivatives of the solution function and the derivative operator. Because it depends partly on the solution function of the ODE, it can be arbitrary. It is therefore linearly independent of the derivative operator $\frac{d}{dx}$, which is a factor of the third term on the left-hand side of (Eq. 5.7.6).

Similarly, compare the first and third terms on the left-hand side of (Eq. 5.7.6):

- The factor 1 of the first term can be treated as a unit natural-number basis of the function space.
- The derivative operator of the third term is another basis, of the derivative function space.

These two factors are linearly independent of each other.

For the left-hand side of (Eq. 5.7.6) to be zero under all circumstances, we must have

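$$\left(2y'' + p\,\frac{d}{dx}\right)(\phi_{py} - \phi_{yp}) = 0 \qquad \text{(Eq. 5.7.7)}$$

while

$$\phi_{xy} - \phi_{yx} = 0 \qquad \text{(Eq. 5.7.8)}$$

$$\frac{d}{dx}(\phi_{px} - \phi_{xp}) = 0 \qquad \text{(Eq. 5.7.9)}$$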

From (Eq. 5.7.7), since the factor $\left(2y'' + p\,\frac{d}{dx}\right)$ is arbitrary, we obtain

$$\phi_{py} - \phi_{yp} = 0 \qquad \text{(Eq. 5.7.10)}$$

Thus,

$$\phi_{py} = \phi_{yp} \qquad \text{(Eq. 5.7.11)}$$

From (Eq. 5.7.9), consider $\phi_{px} - \phi_{xp}$ to be also a function of the variables x, y and p, represented as $F(x,y,p) := \phi_{px} - \phi_{xp}$; thus,

$$\frac{dF}{dx} = F_x + F_y\,p + F_p\,y'' = 0 \qquad \text{(Eq. 5.7.12)}$$

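Since the partial derivative operators $\frac{\partial}{\partial x}$, $\frac{\partial}{\partial y}$ and $\frac{\partial}{\partial p}$ are linearly independent, we have

$$\frac{\partial}{\partial x}(\phi_{px} - \phi_{xp}) = 0 \qquad \text{(Eq. 5.7.13)}$$

$$\frac{\partial}{\partial y}(\phi_{px} - \phi_{xp}) = 0 \qquad \text{(Eq. 5.7.14)}$$

$$\frac{\partial}{\partial p}(\phi_{px} - \phi_{xp}) = 0 \qquad \text{(Eq. 5.7.15)}$$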

Obviously, the only way all three equations above can be satisfied is for the function $\phi_{px} - \phi_{xp}$ to be a numerical constant.

Thus, we have

$$\phi_{px} - \phi_{xp} = C \qquad \text{(Eq. 5.7.16)}$$

where $C$ is a constant. To find the value of the constant $C$, we proceed as follows:

$$\phi_{px} = \phi_{xp} + C \qquad \text{(Eq. 5.7.17)}$$

Integrating both sides of (Eq. 5.7.17) with respect to x,

$$\phi_p = \int \phi_{xp}\,dx + Cx + f(y,p) \qquad \text{(Eq. 5.7.18)}$$

where the term $f(y,p)$ is an arbitrary function of the independent variables y and p. Then, integrating both sides of (Eq. 5.7.18) with respect to p,

$$\phi = \int\left(\int \phi_{xp}\,dx\right)dp + Cxp + \int f(y,p)\,p'\,dx + g(x,y) \qquad \text{(Eq. 5.7.19)}$$

where the term $g(x,y)$ is an arbitrary function of the variables x and y.

Taking the partial derivative of both sides of (Eq. 5.7.19) with respect to x gives

![{\displaystyle \displaystyle \phi _{x}=\int \phi _{xp}dp+Cp+{\frac {\partial }{\partial x}}[\int f(y,p)p'dx+g(x,y)]}](../../ec85a02356bb3229c466b12bb61d5cf07dfdd380.svg) (Eq. 5.7.20)

$$\phi_x = \int \phi_{xp}\,dp + Cp + f(y,p)\,p' + g_x(x,y) \qquad \text{(Eq. 5.7.21)}$$

Then, taking the partial derivative of both sides of (Eq. 5.7.21) with respect to p,

![{\displaystyle \displaystyle \phi _{xp}=\phi _{xp}+C+{\frac {\partial }{\partial p}}[f(y,p)p']}](../../35f0f0f56204dead3b905d63d34a6facc07ab302.svg) (Eq. 5.7.22)

![{\displaystyle \displaystyle \Rightarrow C+{\frac {\partial }{\partial p}}[f(y,p)]p'=0}](../../96f382449b7aed1de54981b35acec5ae32a3819d.svg) (Eq. 5.7.23)

![{\displaystyle \displaystyle \Rightarrow {\frac {C}{p'}}=-{\frac {\partial }{\partial p}}[f(y,p)]}](../../559e8d05f98c8ab6639623491dffefbc62a530ff.svg) (Eq. 5.7.24)

The right-hand side of (Eq. 5.7.24) is a function of the two variables y and p, while the left-hand side is a function of p' only. Hence (Eq. 5.7.24) cannot hold if the constant $C$ has a non-zero value. The only way (Eq. 5.7.24) can be satisfied is $C = 0$, while ![{\displaystyle {\frac {\partial }{\partial p}}[f(y,p)]=0}](../../da1d55d51ee1017ab9ae7549366095d2b45f98da.svg), that is, $f = f(y)$.

Substituting $C = 0$ into (Eq. 5.7.16) yields

$$\phi_{px} - \phi_{xp} = 0 \qquad \text{(Eq. 5.7.25)}$$

Thus we have

$$\phi_{px} = \phi_{xp} \qquad \text{(Eq. 5.7.26)}$$

We are now left with (Eq. 5.7.8). Thus

$$\phi_{xy} = \phi_{yx} \qquad \text{(Eq. 5.7.27)}$$

R*5.8. Working with the coefficients in 1st exactness condition

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Given

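(Eq.(1) p.22-2)

Using the coefficients in the first exactness condition, prove that (Eq.(1)p.22-3) can be written in the form

$$f_{xx} + 2p\,f_{xy} + p^2 f_{yy} = g_{xp} + p\,g_{yp} - g_y, \qquad f_{xp} + p\,f_{yp} + 2f_y = g_{pp}$$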

Solution

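Nomenclature

$p := y'$ and $q := y''$; subscripts denote partial derivatives, e.g. $f_{xp} = \partial^2 f/\partial x\,\partial p$.

For an equation to be exact, it must be of the form

$$G(x,y,p,q) = f(x,y,p)\,q + g(x,y,p) = 0 \qquad \text{(Eq. 5.8.2)}$$

so that

$$g_0 = \frac{\partial G}{\partial y} = f_y\,q + g_y, \qquad g_1 = \frac{\partial G}{\partial p} = f_p\,q + g_p, \qquad g_2 = \frac{\partial G}{\partial q} = f$$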

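Using the chain and product rules,

$$\frac{dg_1}{dx} = f_{px}\,q + f_{py}\,pq + f_{pp}\,q^2 + f_p\,q' + g_{px} + g_{py}\,p + g_{pp}\,q \qquad \text{(Eq. 5.8.3)}$$

$$\frac{d^2 g_2}{dx^2} = f_{xx} + 2f_{xy}\,p + f_{yy}\,p^2 + f_y\,q + 2f_{xp}\,q + 2f_{yp}\,pq + f_{pp}\,q^2 + f_p\,q' \qquad \text{(Eq. 5.8.4)}$$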

Plugging (Eq. 5.8.2), (Eq. 5.8.3) and (Eq. 5.8.4) into (Eq.(1) p.22-3) gives

![{\displaystyle \displaystyle f_{y}q+g_{y}-[f_{xp}q+f_{yp}pq+f_{pp}q^{2}+g_{px}+g_{py}p+g_{pp}q]+f_{xx}+f_{xy}p+f_{yx}q+f_{yx}p+f_{yy}p^{2}+f_{yp}qp+f_{xp}q+f_{py}pq+f_{pp}q^{2}=0}](../../636c3d736a3f978dae061c76b4e5cd73c59bf527.svg)

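After cancelling the opposite terms,

$$f_y\,q + g_y - g_{px} - g_{py}\,p - g_{pp}\,q + f_{xx} + 2f_{xy}\,p + f_{yy}\,p^2 + f_y\,q + f_{xp}\,q + f_{yp}\,pq = 0$$

Now, we can group the terms that are independent of $q$ and those that multiply $q$:

$$\left(f_{xx} + 2p\,f_{xy} + p^2 f_{yy} + g_y - g_{xp} - p\,g_{yp}\right) + \left(f_{xp} + p\,f_{yp} + 2f_y - g_{pp}\right)q = 0$$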

Since 1 and q (the second derivative of y) are, in general, linearly independent, the equation can hold only if both of their coefficients vanish.

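Thus we say that

$$f_{xx} + 2p\,f_{xy} + p^2 f_{yy} = g_{xp} + p\,g_{yp} - g_y$$

and

$$f_{xp} + p\,f_{yp} + 2f_y = g_{pp}$$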

Which is the required proof.
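The two relations can also be checked numerically for a concrete N2-ODE. The sketch below approximates the partial derivatives of f and g by nested central differences, with step h = 1e-4 (our own choice). It then evaluates two residuals at one point:

- r1 = f_xx + 2p f_xy + p² f_yy − (g_xp + p g_yp − g_y)
- r2 = f_xp + p f_yp + 2f_y − g_pp

As an assumed test case it uses f = cos x and g = (x² − sin x)p + 2xy, the example treated in R*5.6. For an exact equation, both residuals should vanish up to finite-difference error.

```python
import math

H = 1e-4           # finite-difference step (our choice)
X, Y, P = 0, 1, 2  # argument positions for (x, y, p)


def d(fun, i, pt, h=H):
    """Central-difference partial derivative of fun(x, y, p) w.r.t. argument i."""
    up, dn = list(pt), list(pt)
    up[i] += h
    dn[i] -= h
    return (fun(*up) - fun(*dn)) / (2 * h)


def d2(fun, i, j, pt, h=H):
    """Second partial derivative: differentiate w.r.t. argument j, then argument i."""
    return d(lambda *q: d(fun, j, q, h), i, pt, h)


def exactness_residuals(f, g, pt):
    """Residuals r1, r2 of the two relations for f*q + g = 0 at pt = (x, y, p)."""
    p = pt[P]
    r1 = (d2(f, X, X, pt) + 2 * p * d2(f, X, Y, pt) + p * p * d2(f, Y, Y, pt)
          - (d2(g, P, X, pt) + p * d2(g, P, Y, pt) - d(g, Y, pt)))
    r2 = d2(f, P, X, pt) + p * d2(f, P, Y, pt) + 2 * d(f, Y, pt) - d2(g, P, P, pt)
    return r1, r2


# Assumed test case: cos(x) y'' + (x^2 - sin x) y' + 2xy = 0 (the R*5.6 example)
def f(x, y, p):
    return math.cos(x)


def g(x, y, p):
    return (x * x - math.sin(x)) * p + 2 * x * y


r1, r2 = exactness_residuals(f, g, (0.3, 0.7, -1.2))
print(r1, r2)  # both should be close to zero
```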

R5.9: Use of Maclaurin Series

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Problem

Use the Taylor series at x = 0 (Maclaurin series) to derive [13]

Solution

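The Taylor series [14] expansion of a function f(x) about a real or complex number c is given by the formula

$$f(x) = \sum_{n=0}^{\infty} \frac{f^{(n)}(c)}{n!}\,(x - c)^n = f(c) + \frac{f'(c)}{1!}(x - c) + \frac{f''(c)}{2!}(x - c)^2 + \cdots \qquad \text{(Eq. 5.9.1)}$$

When the expansion point is zero, i.e., c = 0, the resulting series is called the Maclaurin series.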
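(Eq. 5.9.1) with c = 0 translates directly into a partial-sum routine. The sketch below is illustrative only. e^x and cos x are stand-in test functions, chosen because their derivatives at 0 are known: all ones for e^x, and the repeating pattern 1, 0, -1, 0 for cos x. They are not the functions of parts a and b. The 20-term cutoff is our own choice.

```python
import math


def maclaurin(derivs_at_zero, x):
    """Partial Maclaurin sum: sum over n of f^(n)(0) x^n / n!  (Eq. 5.9.1 with c = 0)."""
    return sum(dn * x**n / math.factorial(n) for n, dn in enumerate(derivs_at_zero))


N = 20                                                         # number of terms kept
exp_derivs = [1.0] * N                                         # e^x: every derivative is 1 at 0
cos_derivs = [[1.0, 0.0, -1.0, 0.0][n % 4] for n in range(N)]  # cos, -sin, -cos, sin at 0

x = 0.8
approx_exp = maclaurin(exp_derivs, x)
approx_cos = maclaurin(cos_derivs, x)
print(approx_exp - math.exp(x), approx_cos - math.cos(x))
```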

Part a

We have the function

Rewriting the Maclaurin series expansion,

$$f(x) = f(0) + \frac{f'(0)}{1!}x + \frac{f''(0)}{2!}x^2 + \frac{f'''(0)}{3!}x^3 + \cdots \qquad \text{(Eq. 5.9.2)}$$

Substituting the values from the tables in (Eq. 5.9.2), we get

(Eq. 5.9.3)

(Eq. 5.9.4)

Where[15]

We can represent

(Eq. 5.9.4) can be written as  , hence proved.


Part b

We have the function

We will use a slightly different approach here than in part a. We will expand  and multiply the resulting expanded function with

Rewriting the Maclaurin series expansion,

$$f(x) = f(0) + \frac{f'(0)}{1!}x + \frac{f''(0)}{2!}x^2 + \frac{f'''(0)}{3!}x^3 + \cdots \qquad \text{(Eq. 5.9.5)}$$

Substituting the values from the tables in (Eq. 5.9.5), we get

(Eq. 5.9.6)

Multiplying (Eq. 5.9.6) with

This expression does not match the expression that we have been asked to prove. We believe this is because of a misprint, and that the expression to be derived must be

Expanding  using Maclaurin's series


Rewriting the Maclaurin series expansion,

$$f(x) = f(0) + \frac{f'(0)}{1!}x + \frac{f''(0)}{2!}x^2 + \frac{f'''(0)}{3!}x^3 + \cdots \qquad \text{(Eq. 5.9.7)}$$

Substituting the values from the tables in (Eq. 5.9.7), we get

(Eq. 5.9.8)

Multiplying (Eq. 5.9.8) with

(Eq. 5.9.9)

Which is the expression in the RHS.

R5.10 Gauss Hypergeometric Series[16]

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Problem

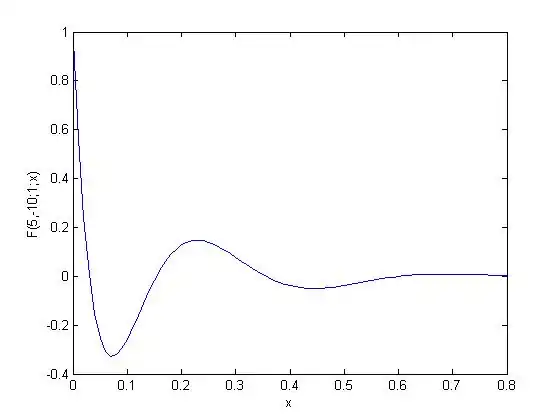

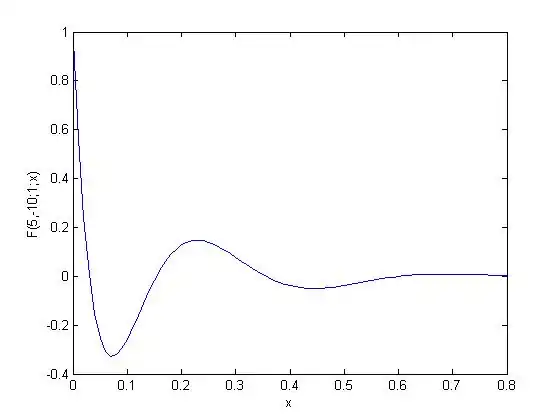

1. Use MATLAB to plot $F(5,-10;1;x)$ near x = 0 to show the local maximum (or maxima) in this region.

2. Show that ((1) pg. 64-9b) holds.

Solution

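The MATLAB code shown below plots the hypergeometric function $F(5,-10;1;x)$ over the interval $0 \le x \le 0.8$.

```matlab
% Evaluate F(5,-10;1;x) on 0 <= x <= 0.8 and plot it
x = (0:0.01:0.8)';
plot(x, hypergeom([5, -10], 1, x))
xlabel('x'); ylabel('F(5,-10;1;x)'); grid on
```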

The plot of the hypergeometric function near x=0 reveals a local maximum of 0.1481 at x = 0.23.
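Because the series terminates, the MATLAB result can be cross-checked without any toolbox. The Python sketch below is our own and uses exact rational arithmetic from the standard fractions module. It builds the coefficients from the term ratio (a+k)(b+k)/((c+k)(k+1)). It then evaluates F(5,-10;1;x) on a fine grid over 0.20 ≤ x ≤ 0.26, an interval we picked to bracket the reported maximum.

```python
from fractions import Fraction


def hyp_coefficients(a, b, c):
    """Coefficients of F(a,b;c;x) when b is a non-positive integer (terminating series)."""
    coeffs, term = [], Fraction(1)
    for k in range(-b + 1):
        coeffs.append(term)
        # ratio of consecutive coefficients: (a+k)(b+k) / ((c+k)(k+1))
        term = term * (a + k) * (b + k) / ((c + k) * (k + 1))
    return coeffs


coeffs = hyp_coefficients(5, -10, 1)


def F(x):
    """Evaluate the degree-10 polynomial F(5,-10;1;x) with Horner's rule."""
    total = 0.0
    for ck in reversed(coeffs):
        total = total * x + float(ck)
    return total


xs = [i / 1000 for i in range(200, 261)]      # 0.200, 0.201, ..., 0.260
x_max = max(xs, key=F)
print([int(ck) for ck in coeffs])
print(x_max, round(F(x_max), 4))
```

Exact fractions avoid any rounding in the coefficients. Horner's rule keeps the floating-point evaluation reasonably stable despite the large alternating coefficients.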

The hypergeometric function $F(a,b;c;x)$ can be expressed using the Pochhammer symbol as

$$F(a,b;c;x) = \sum_{k=0}^{\infty} \frac{(a)_k\,(b)_k}{(c)_k}\,\frac{x^k}{k!}, \quad \text{where } (a)_k = a(a+1)(a+2)\cdots(a+k-1), \ (a)_0 = 1 \qquad \text{(Eq. 5.10.1)}$$

Here $a = 5$, $b = -10$ and $c = 1$.

The hypergeometric series represented by $F(5,-10;1;x)$ terminates after the 11th term because b = -10. Starting with the 12th term, the factor $(b + 10) = 0$ appears in the numerator.

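For the 12th term in the series, k = 11, so $(-10)_{11} = (-10)(-9)\cdots(-1)(0) = 0$.

The hypergeometric series represented by the function $F(5,-10;1;x)$ can be written in expanded form:

$$F(5,-10;1;x) = 1 - 50x + 675x^2 - 4200x^3 + 14700x^4 - 31752x^5 + 44100x^6 - 39600x^7 + 22275x^8 - 7150x^9 + 1001x^{10} \qquad \text{(Eq. 5.10.2)}$$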

If the expansion of the expression in ((1) pg. 64-9b) agrees with (Eq. 5.10.2), then it is a valid representation of the hypergeometric function.

(Eq. 5.10.3)

Combining all like terms yields the following:

The expansion of (Eq. 5.10.3) agrees with the expanded form of the hypergeometric function (Eq. 5.10.2), which confirms that ((1) pg. 64-9b) is true.

R5.11 Calculation of the Time Taken by a Projectile to Hit the Ground

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Given

(Eq.(1)p.63-9)

where $F(a,b;c;x)$ denotes the hypergeometric function.

Consider the integral in (3) Pg.63-8 and (Eq.(1)Pg.63-9)

(Eq.5.11.1)

Let n=3, a=2 and b=10

For each value of time (t), solve for altitude z(t), plot z(t) vs t, and find the time when projectile returns to ground.

Solution

The given integral is a reduced form of the integral (3) Pg.63-8, which relates the following quantities:

- the mass of a projectile;
- the forces acting on it while it moves through the air, namely the air resistance (a function of its height) and its own weight;
- the time taken for the projectile to reach the ground.

Thus it represents a real-world problem whose solution must actually exist.

We have been given the values of n, a and b. Substituting the values in (Eq.5.11.1), we get:

According to Wolfram Alpha [18], the result for the above hypergeometric function contains complex terms. This does not seem to make sense, since the function represents a real-world problem involving real quantities.

When expanded, this series does not terminate. The hypergeometric function has no negative-integer parameter that would make a numerator factor, and hence every later term, vanish. Summing this infinite series was beyond our ability, so the problem could not be solved.
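A non-terminating series can still be evaluated numerically when |x| < 1, because its partial sums converge. The sketch below sums the Gauss series term by term. It then checks the result against the known closed form F(1,1;2;x) = -ln(1-x)/x. The sketch does not reproduce the parameters of (Eq.5.11.1), which are not shown here, and it does not apply when |x| >= 1, where the series diverges.

```python
import math


def hyp2f1_partial_sum(a, b, c, x, tol=1e-15, max_terms=10_000):
    """Sum the Gauss hypergeometric series F(a, b; c; x) until terms fall below tol.

    Only meaningful for |x| < 1, where the non-terminating series converges.
    """
    if abs(x) >= 1:
        raise ValueError("series converges only for |x| < 1")
    total, term = 0.0, 1.0
    for k in range(max_terms):
        total += term
        # next term from the ratio (a + k)(b + k) / ((c + k)(k + 1)) * x
        term *= (a + k) * (b + k) / ((c + k) * (k + 1)) * x
        if abs(term) < tol * abs(total):
            return total
    raise RuntimeError("series did not converge within max_terms")


# Check against a known closed form: F(1, 1; 2; x) = -ln(1 - x) / x.
x = 0.5
approx = hyp2f1_partial_sum(1.0, 1.0, 2.0, x)
exact = -math.log(1 - x) / x
print(approx, exact)
```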

R5.12: Hypergeometric Function

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Problem

1. Is (1)p.64-10 exact?

2. Is (1)p.64-10 in the power form of (3) p.21-1?

3. Verify that F(a,b;c;x) is indeed a solution of (1) p.64-10.

Given

$$x(1-x)\,y''+[c-(a+b+1)x]\,y'-ab\,y=0$$

((1)p.64-10)

$$G(y'',y',y,x) = g(x,y,p) + f(x,y,p)\,y'' = 0, \qquad p := y'$$

((2)p.16-4)

$$g(x,y,p) = \phi_x(x,y,p) + \phi_y(x,y,p)\,p$$

((3)p.16-4)

$$f(x,y,p) = \phi_p(x,y,p)$$

((4)p.16-4)

((2)p.7-3)

$$f_{xx} + 2p\,f_{xy} + p^2 f_{yy} = g_{xp} + p\,g_{yp} - g_y$$

((1)p.16-5)

$$f_{xp} + p\,f_{yp} + 2f_y = g_{pp}$$

((2)p.16-5)

((3)p.21-1)

Solution

1. In order for (1) p.64-10 to be exact, it must first be in the form of (2)p.16-4, with g and f defined in (3)-(4) p.16-4, as seen below.

$$\underbrace{x(1-x)}_{f(x,y,p)}\,y'' + \underbrace{[c-(a+b+1)x]\,y' - ab\,y}_{g(x,y,p)} = 0$$

(Eq. 12.1)

Therefore, the first exactness condition is satisfied.

In order to satisfy the second exactness condition, the following derivatives must be found.

$$g = [c-(a+b+1)x]\,y' - ab\,y = [c-(a+b+1)x]\,p - ab\,y$$

(Eq. 12.2)

$$f_x = 1-2x,\quad f_{xx} = -2,\quad f_y = f_{yy} = f_{xy} = 0,\quad f_p = f_{xp} = f_{yp} = 0$$

$$g_x = -(a+b+1)\,p,\quad g_{xp} = -(a+b+1),\quad g_y = -ab,\quad g_{yp} = 0,\quad g_p = c-(a+b+1)x,\quad g_{pp} = 0$$

(Eq. 12.3) to (Eq. 12.16)

By substituting into the 1st relation, (1) p.16-5:

$$-2 + 2p(0) + p^2(0) = -(a+b+1) + p(0) - (-ab)$$

(Eq. 12.17)

$$-2 = ab - (a+b+1) \quad\Longleftrightarrow\quad (a-1)(b-1) = 0$$

(Eq. 12.18)

This holds only when a = 1 or b = 1, not for all values of a and b, so the 1st relation is not satisfied in general.

By substituting into the 2nd relation, (2) p.16-5:

$$0 + p(0) + 2(0) = 0$$

(Eq. 12.19)

$$0 = 0$$

(Eq. 12.20)

This is true, so the 2nd relation is valid.

Since one of the relations is not satisfied, the second exactness condition is not satisfied.

Hence, (1) p.64-10 is not exact.
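The derivative bookkeeping behind this conclusion can be reproduced symbolically. This is a minimal sketch using SymPy, which is assumed to be available and is not part of the original work. It takes f and g from (Eq. 12.1) and (Eq. 12.2) and writes both relations of p.16-5 as LHS minus RHS. The first residual factors as -(a-1)(b-1), so it vanishes only when a = 1 or b = 1. The second residual is identically zero.

```python
import sympy as sp

x, y, p, a, b, c = sp.symbols("x y p a b c")

# (Eq. 12.1)-(Eq. 12.2): coefficient of y'' and the remaining terms, with p = y'
f = x * (1 - x)
g = (c - (a + b + 1) * x) * p - a * b * y

# 1st relation, (1) p.16-5, written as LHS - RHS
rel1 = (sp.diff(f, x, 2) + 2 * p * sp.diff(f, x, y) + p**2 * sp.diff(f, y, 2)) - (
    sp.diff(g, x, p) + p * sp.diff(g, y, p) - sp.diff(g, y)
)
# 2nd relation, (2) p.16-5, written as LHS - RHS
rel2 = (sp.diff(f, x, p) + p * sp.diff(f, y, p) + 2 * sp.diff(f, y)) - sp.diff(g, p, 2)

print("1st relation residual:", sp.factor(rel1))
print("2nd relation residual:", sp.simplify(rel2))
```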

2. The following equalities must hold for (1) p.64-10 to be in the power form of (3) p.21-1.

(Eq. 12.21)

(Eq. 12.22)

(Eq. 12.23)

Since there are no values of  that make these equalities true, (1) p.64-10 is not in power form.

3. To verify that F(a,b;c;x) is a solution of (1) p.64-10, we select the example of  .

(Eq. 12.24)

Next, the first and second derivatives of y must be found.

(Eq. 12.25)

(Eq. 12.26)

Substituting into (1) p.64-10:

(Eq. 12.27)

(Eq. 12.28)

(Eq. 12.29)

(Eq. 12.30)

(Eq. 12.31)

This equation holds, so F(a,b;c;x) is a solution of (1) p.64-10.
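Part 3 checks a single example. As a complementary sketch, and not the report's method, the code below substitutes the truncated power series of F(a,b;c;x) into (1) p.64-10 power by power, using exact fractions. The parameter values are hypothetical. The coefficient of x^k reduces to (k+1)(k+c)c_{k+1} - (k+a)(k+b)c_k, so every coefficient below the truncation degree should be exactly zero.

```python
from fractions import Fraction


def series_coefficients(a, b, c, n):
    """Coefficients c_0..c_n of F(a, b; c; x) = sum_k (a)_k (b)_k / ((c)_k k!) x**k."""
    coeffs = [Fraction(1)]
    for k in range(n):
        coeffs.append(coeffs[-1] * (a + k) * (b + k) / ((c + k) * (k + 1)))
    return coeffs


def ode_residual_coefficients(a, b, c, coeffs):
    """Coefficient of x**k in x(1-x)y'' + [c-(a+b+1)x]y' - ab*y for the truncated series.

    Only k = 0..len(coeffs)-2 are returned, since higher powers are affected by truncation.
    """
    res = []
    for k in range(len(coeffs) - 1):
        ck, ck1 = coeffs[k], coeffs[k + 1]
        res.append(
            (k + 1) * k * ck1        # from  x * y''
            - k * (k - 1) * ck       # from -x**2 * y''
            + c * (k + 1) * ck1      # from  c * y'
            - (a + b + 1) * k * ck   # from -(a + b + 1) x * y'
            - a * b * ck             # from -ab * y
        )
    return res


a, b, c = Fraction(1, 2), Fraction(1, 3), Fraction(3, 4)  # hypothetical parameters
coeffs = series_coefficients(a, b, c, 20)
print(ode_residual_coefficients(a, b, c, coeffs))
```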

R*5.13 Exactness of Legendre and Hermite equations [19]

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Given


Legendre equation:

$$(1-x^2)\,y'' - 2x\,y' + n(n+1)\,y = 0$$

(Eq. 5.13.1)

Hermite equation:

(Eq. 5.13.2)

Problem

1. Verify the exactness of the Legendre (Eq. 5.13.1) and Hermite (Eq. 5.13.2) equations.

2. If the Hermite equation is not exact, check whether it is in power form, and see whether it can be made exact using the integrating factor method (IFM) with  .

3. The first few Hermite polynomials are:

Verify that these are homogeneous solutions to the Hermite differential equation (Eq. 5.13.2).

Solution

Nomenclature

Exactness of Legendre equation

To satisfy the first exactness condition, the Legendre equation (Eq. 5.13.1) should be of the form:

$$G(y'',y',y,x) = g(x,y,p) + f(x,y,p)\,y'' = 0, \qquad p := y'$$

(Eq. 5.13.3)

Since (Eq. 5.13.1) is of this form, with $f(x,y,p) = 1-x^2$ and $g(x,y,p) = -2xp + n(n+1)\,y$, it satisfies the first exactness condition.

The second exactness condition can be checked in two ways.

Method 1

The second exactness condition is satisfied if (Eq. 5.13.1) satisfies (Eq. 5.13.4) and (Eq. 5.13.5):

$$f_{xx} + 2p\,f_{xy} + p^2 f_{yy} = g_{xp} + p\,g_{yp} - g_y$$

(Eq. 5.13.4)

$$f_{xp} + p\,f_{yp} + 2f_y = g_{pp}$$

(Eq. 5.13.5)

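Computing the derivatives of $f = 1-x^2$ and $g = -2xp + n(n+1)\,y$,

$$f_{xx} = -2,\quad f_y = f_{yy} = f_{xy} = f_{xp} = f_{yp} = 0$$

$$g_{xp} = -2,\quad g_y = n(n+1),\quad g_{yp} = 0,\quad g_{pp} = 0$$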

Substituting these into (Eq. 5.13.4) and (Eq. 5.13.5),

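(Eq. 5.13.4): $-2 + 2p(0) + p^2(0) = -2 + p(0) - n(n+1)$, which requires $n(n+1) = 0$.

(Eq. 5.13.5): $0 + p(0) + 2(0) = 0$, which holds for all $n$.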

Hence, the second exactness condition is satisfied when $n = 0$ or $n = -1$.

Method 2

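The second exactness condition is met if (Eq. 5.13.1) satisfies:

$$P''(x) - Q'(x) + R(x) = 0$$

(Eq. 5.13.6)

where $P(x)$, $Q(x)$ and $R(x)$ are the coefficients of $y''$, $y'$ and $y$ in the linear form $P(x)\,y'' + Q(x)\,y' + R(x)\,y = 0$. For the Legendre equation, $P(x) = 1-x^2$, $Q(x) = -2x$ and $R(x) = n(n+1)$.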

Computing the derivatives of $P(x) = 1-x^2$ and $Q(x) = -2x$,

$$P''(x) = -2, \qquad Q'(x) = -2$$

Substituting these, together with $R(x) = n(n+1)$, in (Eq. 5.13.6) yields,

$$-2 - (-2) + n(n+1) = n(n+1) = 0$$

Again we see that the second exactness condition is satisfied when $n = 0$ or $n = -1$.
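The two methods can be cross-checked symbolically. This sketch uses SymPy, which is an assumption; the report did these derivatives by hand. It takes f and g from the Legendre equation. P, Q and R denote the coefficients of y'', y' and y, which is our own naming. Both methods should give n(n+1) = 0, that is, n = 0 or n = -1.

```python
import sympy as sp

x, y, p, n = sp.symbols("x y p n")

# Legendre equation (Eq. 5.13.1): (1 - x**2) y'' - 2x y' + n(n+1) y = 0, with p = y'
f = 1 - x**2
g = -2 * x * p + n * (n + 1) * y

# Method 1: relations (Eq. 5.13.4) and (Eq. 5.13.5), each written as LHS - RHS
rel4 = (sp.diff(f, x, 2) + 2 * p * sp.diff(f, x, y) + p**2 * sp.diff(f, y, 2)) - (
    sp.diff(g, x, p) + p * sp.diff(g, y, p) - sp.diff(g, y)
)
rel5 = (sp.diff(f, x, p) + p * sp.diff(f, y, p) + 2 * sp.diff(f, y)) - sp.diff(g, p, 2)

# Method 2: linear-ODE condition P'' - Q' + R = 0 (P, Q, R naming is ours)
P, Q, R = 1 - x**2, -2 * x, n * (n + 1)
cond6 = sp.diff(P, x, 2) - sp.diff(Q, x) + R

print("Method 1:", sp.solve(rel4, n), "| (Eq. 5.13.5) residual:", sp.simplify(rel5))
print("Method 2:", sp.solve(cond6, n))
```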

Exactness of Hermite equation

To satisfy the first exactness condition, the Hermite equation (Eq. 5.13.2) should be of the form (Eq. 5.13.3):

Hence (Eq. 5.13.2) satisfies the first exactness condition.

The second exactness condition can be checked in two ways.

Method 1

The second exactness condition is satisfied if (Eq. 5.13.2) satisfies (Eq. 5.13.4) and (Eq. 5.13.5):

Computing the derivatives,


Substituting these into (Eq. 5.13.4) and (Eq. 5.13.5),

(Eq. 5.13.4)  .

(Eq. 5.13.5)  .

Hence, the second exactness condition is satisfied only when $n = -1$. This is a necessary condition.

Method 2

The second exactness condition is met if (Eq. 5.13.2) satisfies (Eq. 5.13.6).

Computing the derivatives,


Substituting these in (Eq. 5.13.6) yields,

The second exactness condition is satisfied only when $n = -1$.
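The same symbolic check applies to the Hermite equation. The equation itself is not reproduced in this copy, so this sketch (using SymPy, an assumption) evaluates two common normalizations. The first is the physicists' form, y'' - 2xy' + 2ny = 0. The second is the probabilists' form, y'' - xy' + ny = 0. Both lead to the single value n = -1 under either method.

```python
import sympy as sp

x, y, p, n = sp.symbols("x y p n")


def exactness_solutions(P, Q, R):
    """Return (Method 1, Method 2) solutions for n of the 2nd exactness condition.

    The ODE is P y'' + Q y' + R y = 0. Method 1 uses (Eq. 5.13.4) with f = P and
    g = Q p + R y; Method 2 uses the linear-ODE condition P'' - Q' + R = 0.
    """
    f, g = P, Q * p + R * y
    rel4 = (sp.diff(f, x, 2) + 2 * p * sp.diff(f, x, y) + p**2 * sp.diff(f, y, 2)) - (
        sp.diff(g, x, p) + p * sp.diff(g, y, p) - sp.diff(g, y)
    )
    rel5 = (sp.diff(f, x, p) + p * sp.diff(f, y, p) + 2 * sp.diff(f, y)) - sp.diff(g, p, 2)
    if sp.simplify(rel5) != 0:
        raise ValueError("(Eq. 5.13.5) is expected to hold identically for a linear ODE")
    cond6 = sp.diff(P, x, 2) - sp.diff(Q, x) + R
    return sp.solve(rel4, n), sp.solve(cond6, n)


# Two common normalizations of the Hermite equation (which one the notes use is an assumption).
physicists = exactness_solutions(sp.Integer(1), -2 * x, 2 * n)  # y'' - 2x y' + 2n y = 0
probabilists = exactness_solutions(sp.Integer(1), -x, n)        # y'' - x y' + n y = 0
print("physicists' form:", physicists)
print("probabilists' form:", probabilists)
```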

We have seen that the Hermite equation (Eq. 5.13.2) is not exact when $n \neq -1$.

The power form of L2-ODE-VC is

(Eq. 5.13.7)

Comparing (Eq. 5.13.2) with (Eq. 5.13.7), we can see that the Hermite equation is of the power form with:

Hence, we can consider an integrating factor which is in power form,

Replacing the 'n' term in (Eq. 5.13.2) with  to avoid confusion, we need to find  such that the following N2-ODE is exact:

(Eq. 5.13.8)

The Hermite equation (Eq. 5.13.2) can be written as:

(Eq. 5.13.9)

(Eq. 5.13.9) should satisfy (Eq. 5.13.4) and (Eq. 5.13.5) to meet the second exactness condition.

Computing the derivatives,


Substituting the derivatives in (Eq. 5.13.4) and (Eq. 5.13.5),

(Eq. 5.13.4)

(Eq. 5.13.5)

We can see that the second exactness condition can be satisfied only when  .

When  .

When  ,  .

Hence we can say

Therefore,  is a solution.


Hence, (Eq. 5.13.2) can be made exact using the integrating factor

Verification of homogeneous solutions of the Hermite equation

Case 1

Substituting in (Eq. 5.13.2),

Case 2

Substituting in (Eq. 5.13.2),

Case 3

Substituting in (Eq. 5.13.2),

Hence the given first three Hermite polynomials are homogeneous solutions of the Hermite equation.
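The polynomial substitutions in Cases 1 to 3 can be checked mechanically. The list of polynomials is not reproduced in this copy, so this sketch makes two assumptions: the physicists' convention H0 = 1, H1 = 2x, H2 = 4x^2 - 2, and the Hermite equation written as y'' - 2xy' + 2ny = 0. Polynomials are stored as coefficient lists, lowest power first.

```python
def derivative(coeffs):
    """Derivative of a polynomial stored as [c0, c1, c2, ...] (c_k multiplies x**k)."""
    return [k * ck for k, ck in enumerate(coeffs)][1:]


def hermite_residual(coeffs, n):
    """Coefficients of y'' - 2x y' + 2n y for the polynomial y (physicists' form, assumed)."""
    d1 = derivative(coeffs)
    d2 = derivative(d1)
    res = [0] * len(coeffs)
    for k, ck in enumerate(d2):
        res[k] += ck                 # y''
    for k, ck in enumerate(d1):
        res[k + 1] -= 2 * ck         # -2x y' raises each power of x by one
    for k, ck in enumerate(coeffs):
        res[k] += 2 * n * ck         # 2n y
    return res


# Assumed physicists' Hermite polynomials H_0, H_1, H_2 (lowest power first).
HERMITE = {0: [1], 1: [0, 2], 2: [-2, 0, 4]}

for n, poly in HERMITE.items():
    print(f"n = {n}: residual coefficients {hermite_residual(poly, n)}")
```

Pairing a polynomial with the wrong n (for example H2 with n = 1) leaves a nonzero residual. This is a quick way to see that each polynomial solves the equation only for its own n.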

R*5.14 Expressions for X(x) [20]

On our honor, we did this problem on our own, without looking at the solutions in previous semesters or other online solutions.

Given


(Eq. 5.14.1)

(Eq. 5.14.2)

Where

Problem

Find expressions for  in terms of


Solution

By definition,

and

and

Hence, (Eq. 5.14.2) can be written as:

(Eq. 5.14.3)

where,

Hence, (Eq. 5.14.3) is a generic expression for X(x).

Contributing Members

Problem Contribution

| Problem number | Solved by | Typed by | Proofread by |
|---|---|---|---|
| R5.1 | Ramchandra, Mohammed | Ramchandra | Linghan |
| R5.2 | Amrith, Fabian | Amrith | Ramchandra |
| R5.3 | Linghan, Sarah | Linghan | Amrith |
| R5.4 | Linghan, Rahul | Linghan | Fabian |
| R*5.5 | Rahul, Mohammed, Fabian | Rahul | Amrith |
| R*5.6 | Ramchandra, Amrith, Linghan | Ramchandra | Fabian |
| R*5.7 | Linghan, Sarah, Mohammed | Linghan, Ramchandra | Ramchandra |
| R*5.8 | Mohammed, Amrith | Mohammed | Rahul |
| R5.9 | Ramchandra, Rahul | Ramchandra | Sarah |
| R5.10 | Fabian, Amrith | Fabian | Ramchandra |
| R5.11 | Fabian, Ramchandra, Amrith | Ramchandra | ---- |
| R5.12 | Sarah, Fabian, Mohammed | Sarah | Amrith |
| R*5.13 | Rahul, Ramchandra, Sarah | Rahul | Mohammed |
| R*5.14 | Rahul, Sarah, Linghan | Rahul | Mohammed |

References

1. Lecture Notes Section 20, Pg. 20-2b
2. Lecture Notes Section 20, Pg. 20-2b
3. Lecture Notes Section 20, Pg. 20-1
4. Euler Formula
5. Lecture Notes Section 21, Pg. 21-5
6. Lecture Notes Section 21, Pg. 21-4
7. Lecture Notes Section 21, Pg. 21-5
8. Lecture Notes Section 21, Pg. 21-4
9. Lecture Notes Section 21, Pg. 21-6
10. Lecture Notes Section 16
11. Lecture Notes Section 22, Pg. 22-4
12. Lecture Notes Section 22, Pg. 22-6
13. Lecture Notes Section 64, Pg. 64-7b
14. Taylor Series Wiki
15. Lecture Notes Section 64, Pg. 64-4
16. Lecture Notes Section 64, Pg. 64-9b
17. Lecture Notes Section 64, Pg. 64-9b
18. Wolfram Alpha's Solution to Hypergeometric Function
19. Lecture Notes Section 27, Pg. 27-1, 27-2
20. Lecture Notes Section 30, Pg. 30-3